I have always been fascinated by the magical ability of photography to transport us back in time. I’m fortunate enough to have family albums dating back a couple of generations despite many photos lost due to war, disasters and pests. To save our remaining photos from destruction I’ve started the process of digitizing our many photo books so they can forever live in the cloud.

When I look at many of our black and white photos, I often wonder what they’d look like if they had the extra dimensionality of color. As a long time fan of Reddit’s colorization artists, who work wonders to bring color to old black and white photos, I wanted to see how close neural networks can come to replicating their amazing work.

Prior Work

The goal is to take one image and produce another image with desired visual characteristics, in our case bw-2-color. There has been a lot of work in this area using Generative Adversarial Networks. Applications go beyond colorization: image segmentation, satellite imagery to street maps, “painting” a photo with impressionist techniques, turning day images to night, converting drawings to object renderings, the list goes on.

2014 - Generative Adversarial Nets [paper]

2017 - Pix2Pix - Image-to-Image Translation with Conditional Adversarial Nets [website]

2017 - CycleGAN - Image to image translation without target data [website]

5⁄2018 - Google testing colorization for photos [news article]

11⁄2018 - DeOldify - Open-Source colorization of photos based on fast.ai [source code]

2⁄2019 - Colourise.sg - Colorization GAN trained on old Singaporean photos [blog post]

From trying out the current colorization solutions, I found that:

- Results are often faded, DeOldify’s author is working on training a DeFader

- There is no one-size-fits-all solution yet. The best results come from scenes the neural networks have seen before.

- While the quality of the results can vary, most of the time the results are plausible enough to bring out overwhelming nostalgia and awe.

Training the Artist and the Critic

I started with a Generative Adversial Network (GAN) since GANs have shown great successes in image-to-image translations. A GAN comprises of two networks: a generator responsible for predicting the color values in an image and a critic (discriminator) that judges how realistic the predicted color values are compared to the original image.

Here’s the architecture of the neural network I’ll refer to as ColorGAN:

Training a GAN switching back and forth between training the generator and the critic for many cycles. As the generator good enough to fool the critic, we raise the bar by traning the critic more. As you can work out, this process is very time and compute intensive.

To accelerate this process, I started with generator and critic with pretrained weights from ImageNet. I also trained the generator on its own using the Perceptual Loss function proposed by Johnson, Alahi, Li [paper] after learning about it the 2019 version of fast.ai’s Practical deep learning for coders.

Aside: I love that the fast.ai course and library integrates some of the latest and greatest research. I use fast.ai in this application because I’m eager to learn new techniques that they’ve incorporated. Living on the edge has its drawbacks: the fast.ai library can be unstable and requires you to dig deep into its internals to be effective. Even though it’s on version 1.0 it still feels like beta software. I’m definite grateful for all the hard work that went into this nifty library.

For my training data, I combined a subset of Google’s Open Images v4 with frames from movies and shows in the public domain. I selected photos and scenes that included human faces and natural landscapes so I expect the model to perform better on these subjects than still life photos.

I trained on a single SageMaker notebook instance (p3.xlarge) with a single NVIDIA® V100 GPU. It took about 12 hrs to get decent results with square 256x256 images.

Deploy

SageMaker offers inference endpoints that can scale automatically with a load balancer. To provision an endpoint, you can use prebuilt container images that works with models trained in MxNet, Tensorflow, and PyTorch or supply your own container hosted in AWS Elastic Container Registry (ECR). In order to use fastai for inference, I built my own docker container image based off of pytorch’s publicly available image on Docker Hub. Because SageMaker endpoints only permit authenticated request using AWS Signature v4, I built a serverless frontend using Lambda and API Gateway to allow controlled public access API to the color API. This process took some investigation and debugging so I’ll do a follow up post with step-by-step instructions soon.

You can see the architecture of this application below:

#Results

In its current state, ColorGAN creates good results 1⁄3 of the time. It does very well with human faces with shirts, skies, and mid-day natural scenes. It struggles with complex scenes and fashion, like its creator.

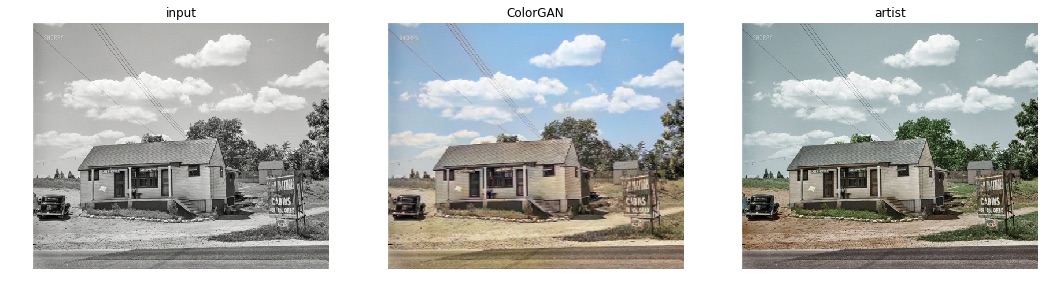

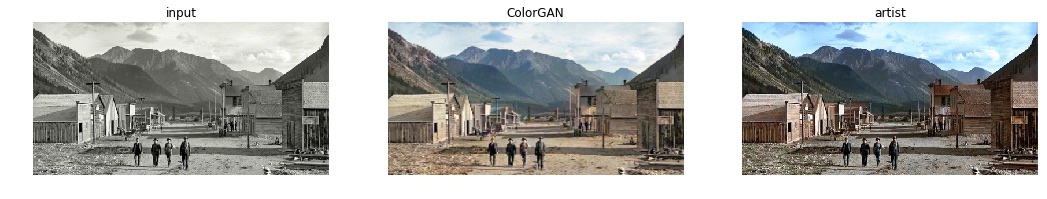

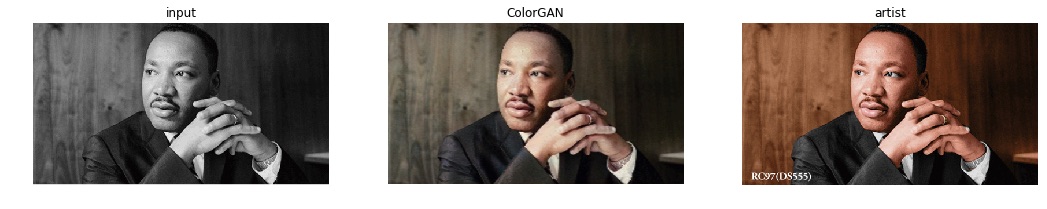

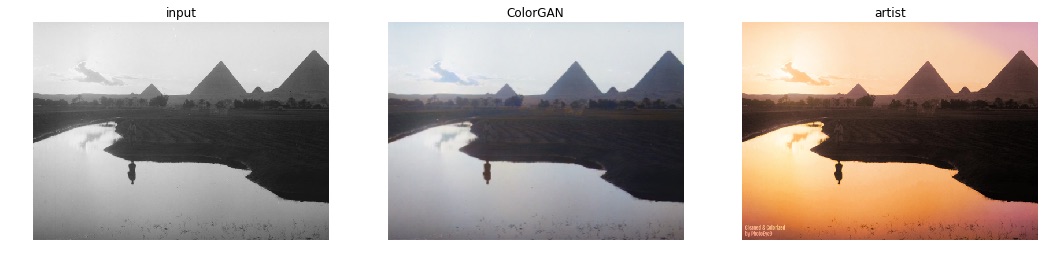

All of them test images below are from the Colorization subreddit so I can compare the ColorGAN results with human artists. I’ve linked back to the original posts in the captions.

The Good

Audrey Hepburn 1955 (Source). The shirt color is better on the human artist side, but I prefer the ColorGAN’s treament of the face. There are still some blotches of gray (near the ear) on the ColorGAN rendering.

Cabin (Source).

Colorado 1800s (Source). I prefer the warmer tones from the ColorGAN.

Rev. Martin Luther King Jr. (Source).

The Bad

Egypt (Source). Poor ColorGAN has not seen enough sunsets.

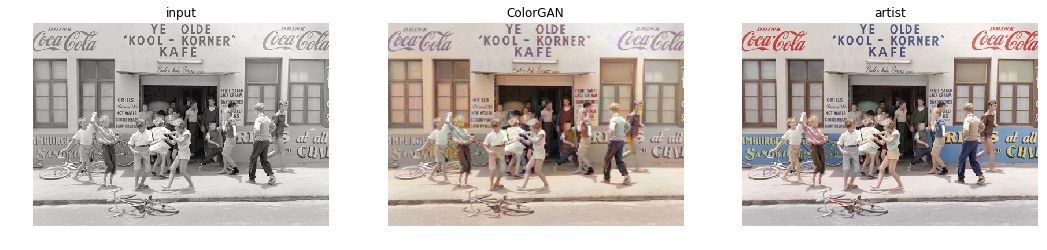

Kool Korner Kafe, Australia 1958 (Source). There’s a lot going on here and the ColorGAN has decided to optimize by muting all the colors. If you view the larger ColorGAN result, you can see a blotches of gray throughout.

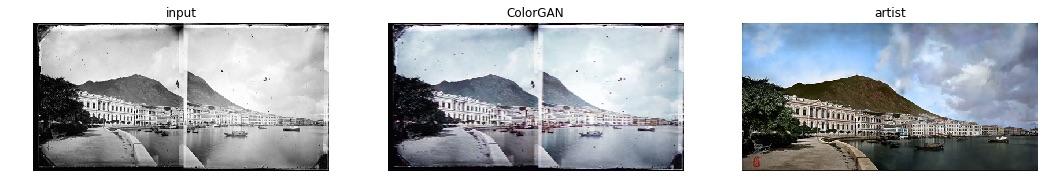

Hong Kong 1800s (Source). ColorGAN has opted for the muted colors. There’s a lot of work aside from colorization to restore this photo, ColorGAN needs to make friends with DeFadeGAN, amongst many others.

The Ugly

Jean-Marie Franceschi (Source). Large gray blotches throughout, skin tone is unatural. ColorGAN was trained on relatively low examples of human direct portraits such as this one. First path to imporvement would be augmenting the data set with different white balance settings to simulate different lighting condtions. I also can achieve better results through more iterations, trying some test time augmentation or tuning the loss function to better penalize blotchy results like these.

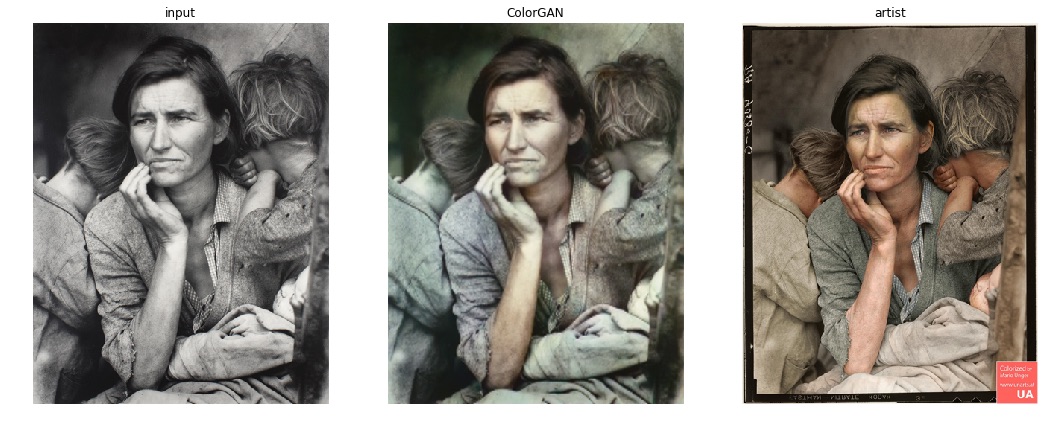

MIgrant Mother from Dorothea Lange (Source). The artist’s work blows even the best results from ColorGAN out of the water.

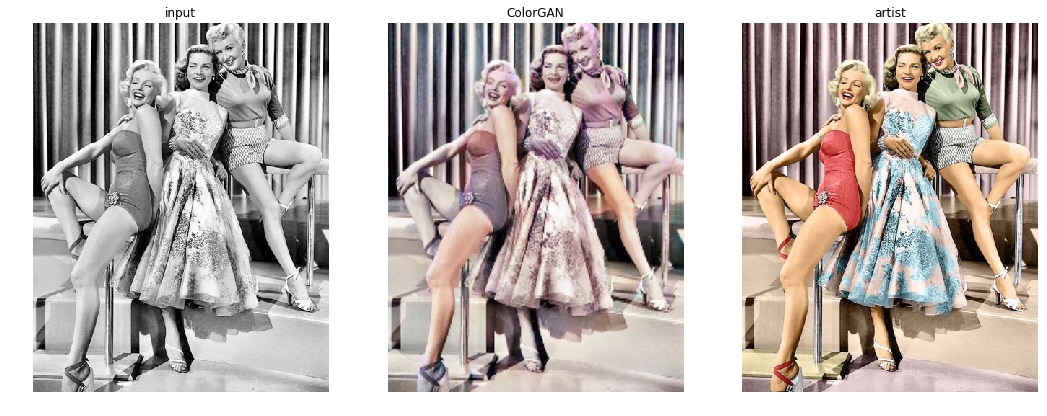

Marilyn Monroe, Lauren Bacall and Betty Grable (Source). ColorGAN is bad at glamour and fashion.

Nature

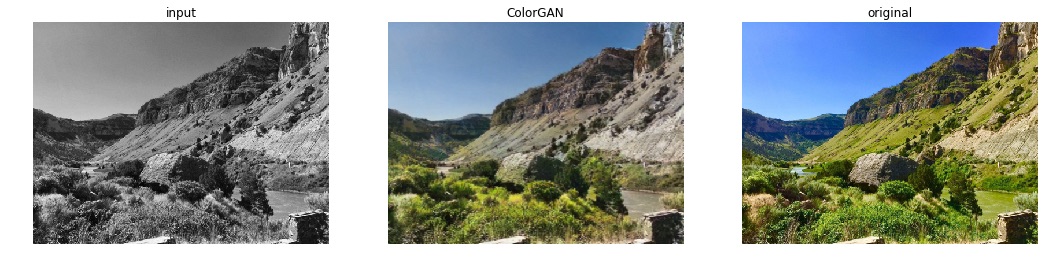

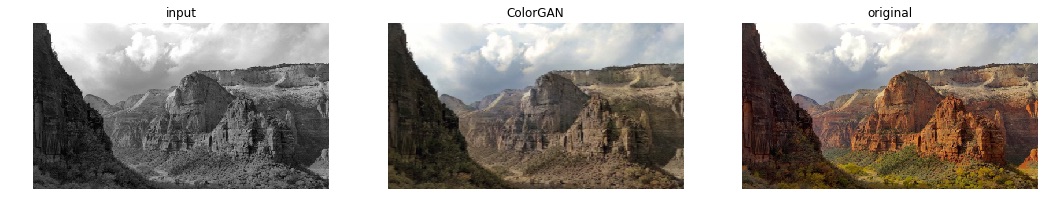

Wyoming (Source).

Zion (Soure)

Bird (Source)

Try it out

You can try out the colorization network here.

Future

For restoration of photos, the ColorGAN still has a long way to go to achieve the wow factor. What I would do next to improve its coloring performance are:

- Increase quantity and variety of training data. The current iteration (3.1.2019) was only trained with about 80,000 photos! Data augmentation here would help as well.

- Improve the loss function. Currently the generator relies too much on the Perceptual Loss function, this helps it achieve good results in a short amount time but ideally the GAN training process should help the GAN learn its own loss function. As Jantic mentioned on DeOldify this is how he gets good results.

The next goal for performance is:

- 80% of images generated can be classified in the “good” category above

- Much better performance on clothing

- Handle larger pictures

In addition to coloring, old photos require some defading and removal of imperfections. I can apply some fake distortion and aging effects to train the network to fill in the gaps.

Playing around with GANs have been an incredible experience. Their uncanny ability to do image-to-image generation gives us scalable superpowers such as adding colorization or generating maps from satellite imagery. Like many superpowers GANs have many controversial uses as well, such as creating realistic faces of non-existing people (source) or creating fake footage of influential people.

Typically after training, only the generator portion of the GAN is used to create content. But the critic can be very useful as well to distinguish between real and fake. I’m looking forward to having both generator and critic networks available for easy public consumption anyone can run tests against potentially fake online content.